How to See

Max Oullette-Howitz / 2019

Yale School of Architecture

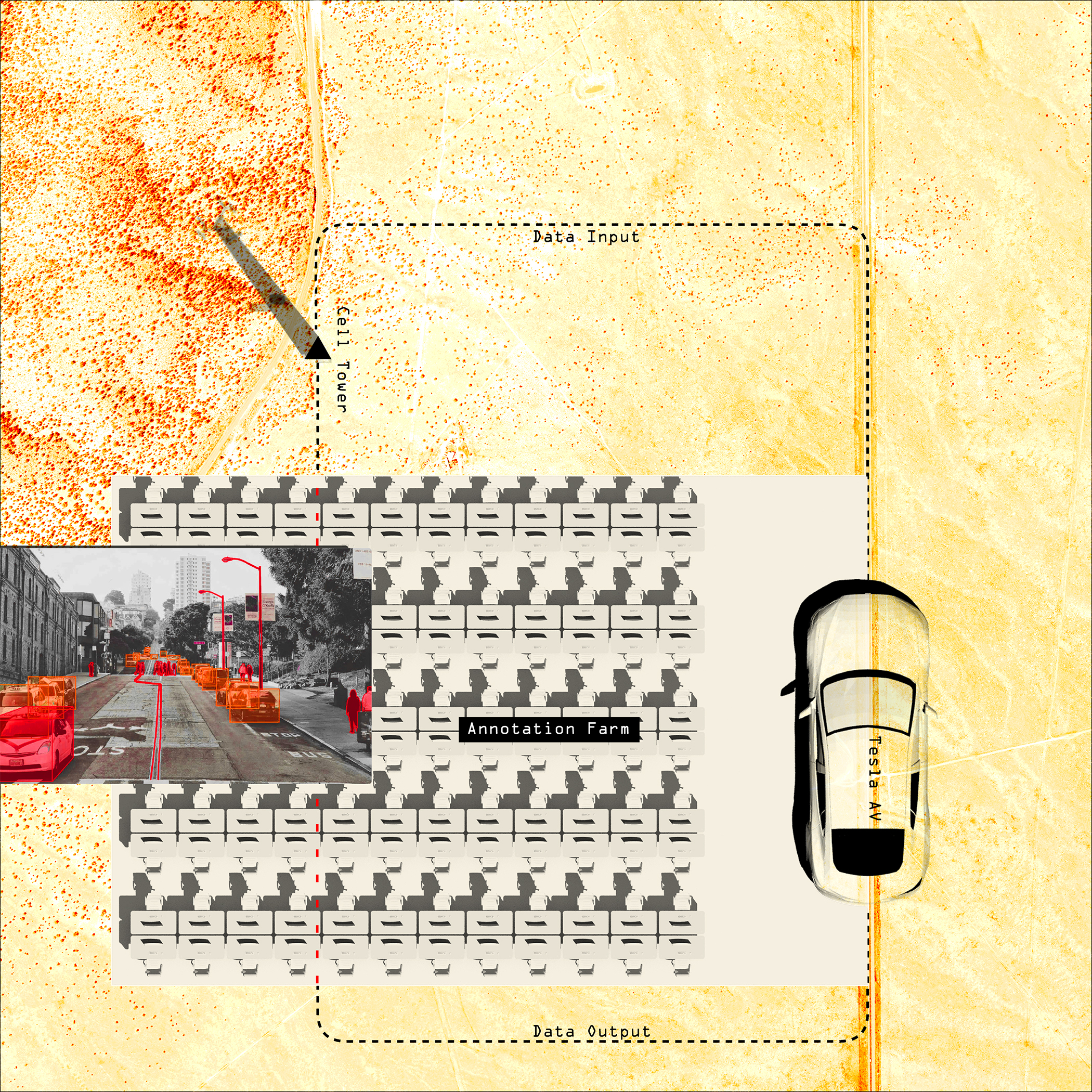

Before autonomous vehicles can be deployed at scale, someone needs to teach them how to see. Right now, the most effective way to do this is through human annotation. With a robust, diverse, and high-quality data set, and an army of annotators, it is possible to teach a car to recognize and respond to its environment (Fig. 1). The training of neural networks is not limited to the autonomous vehicle field, but this is the first large-scale application of such networks that is likely to radically change, and potentially save, the lives of a significant number of people. HOW TO SEE explores the relationship between these systems and the humans powering their perception.

Fig. 1

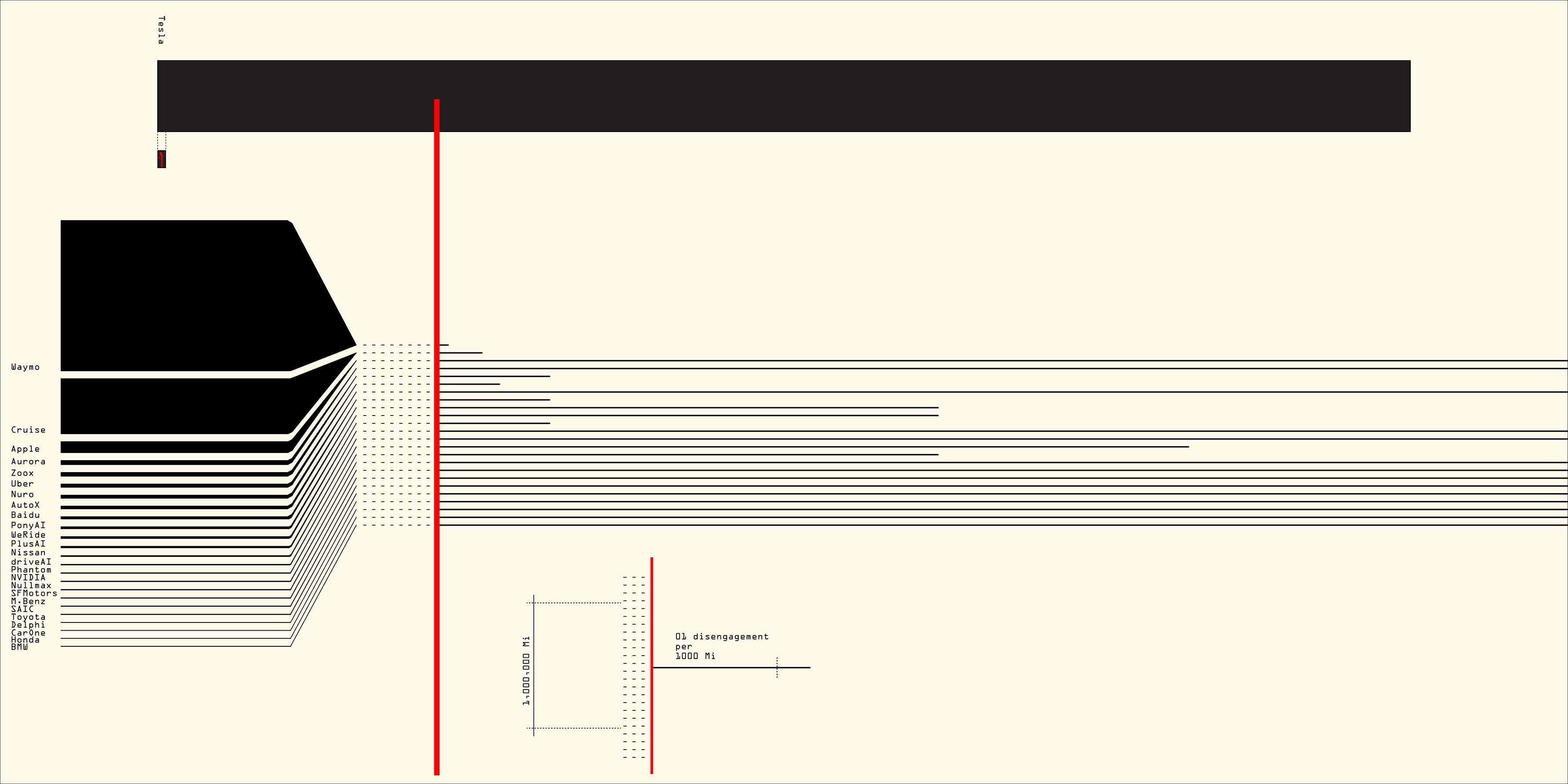

Fig. 2

The graph above (Fig. 2) shows manual disengagements, moments of human intervention, for multiple autonomous vehicle manufacturers and operators.

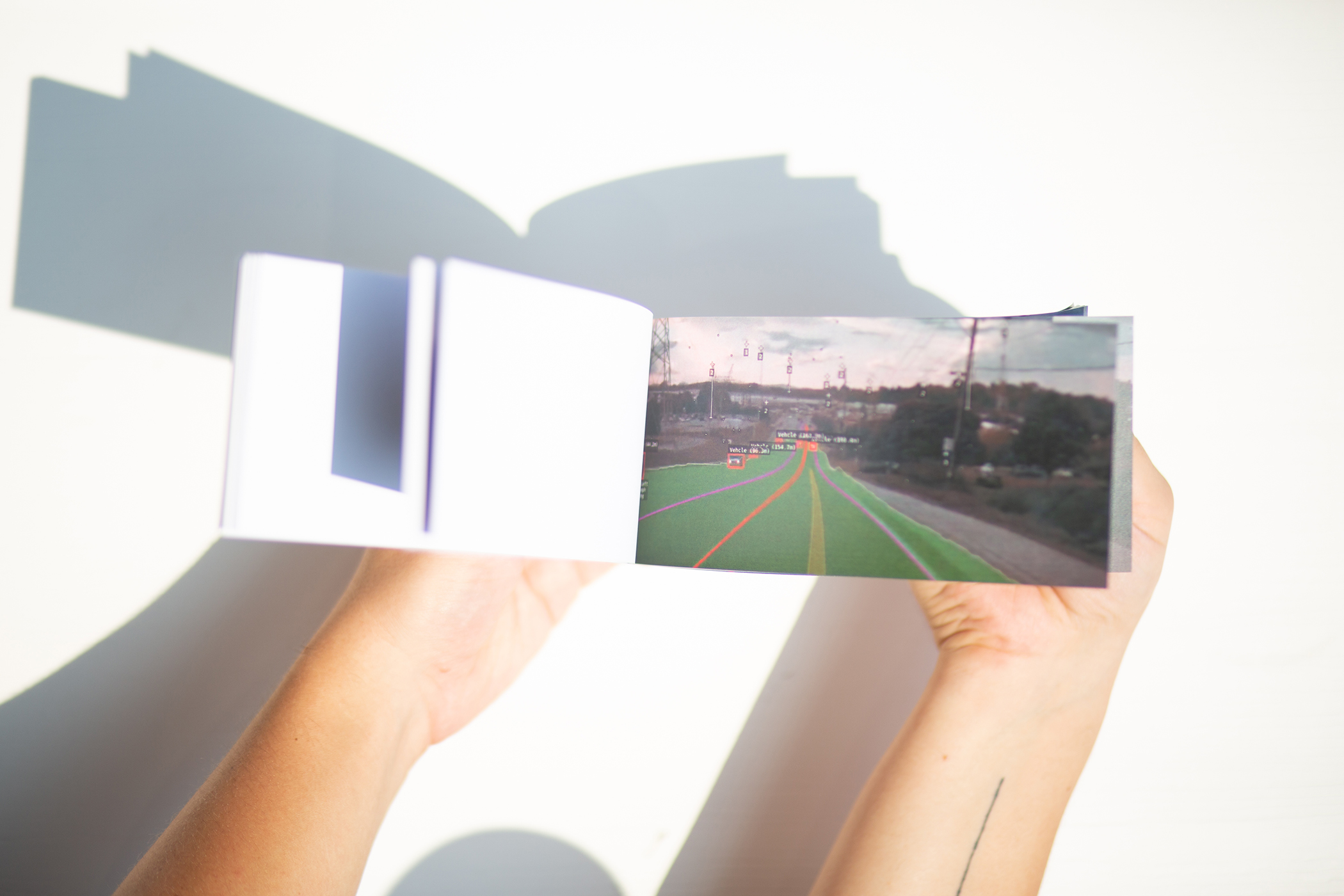

Fig. 3

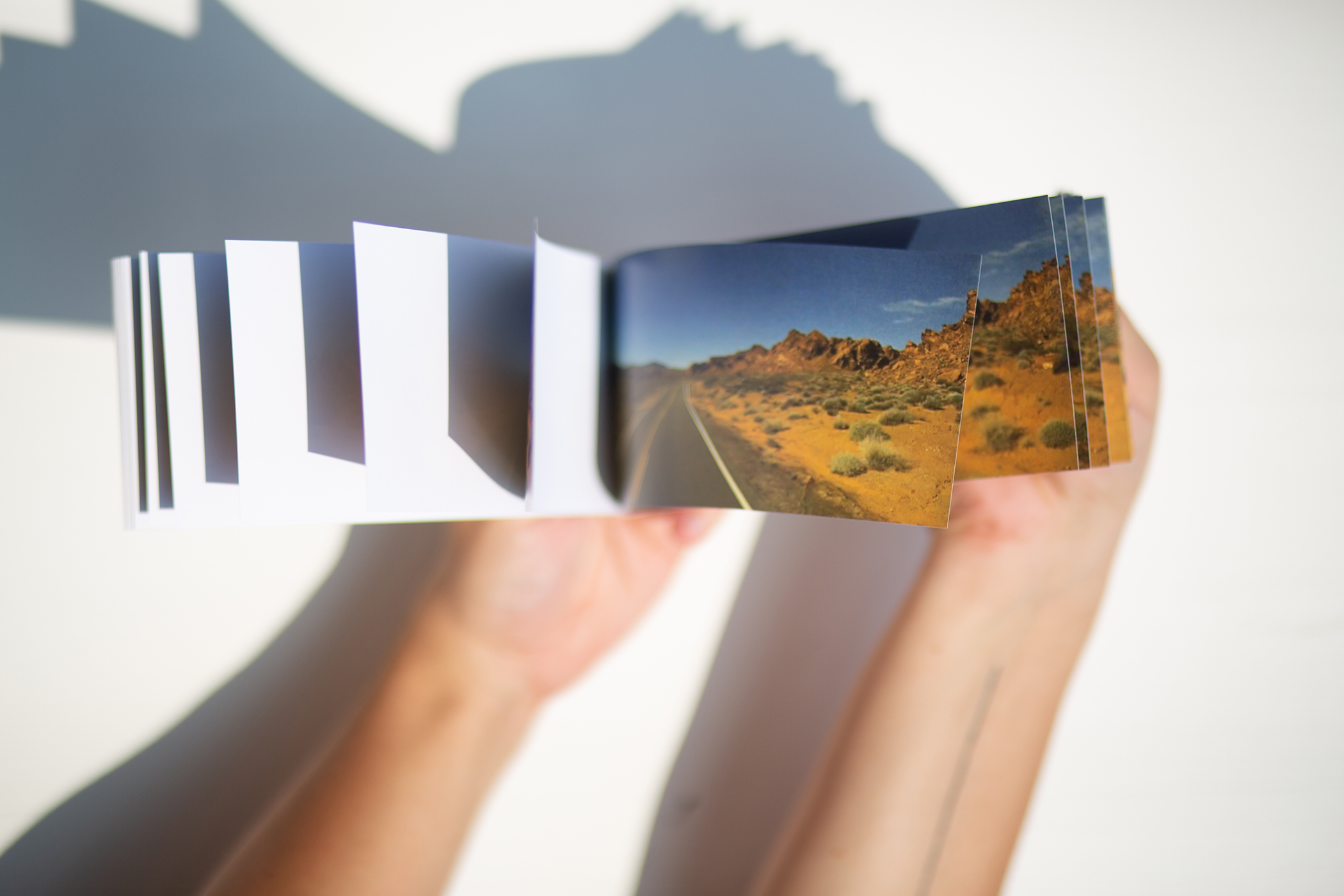

This research also includes flipbooks (Fig. 3) that depict how a Tesla (Fig. 4) or Waymo vehicle “sees” compared with how a human sees (Fig. 5) under similar driving conditions.

Fig. 4

Fig. 5